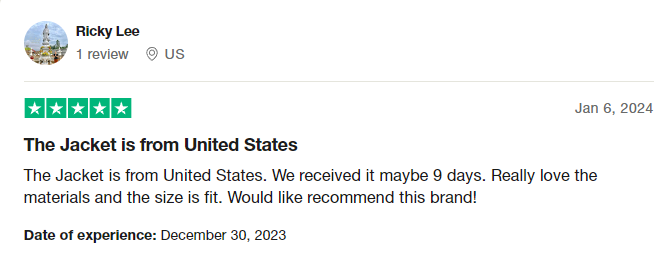

Cat the sound of silence Shirt

Automatic Classification of Cat Vocalizations Emitted in Different Contexts

Stavros Ntalampiras, Luca Andrea Ludovico, Giorgio Presti, Emanuela Prato Previde, Monica Battini, Simona Cannas, Clara Palestrini, and Silvana Mattiello

Simple Summary

Vocalizations are cats' basic means of communication. They are particularly important in assessing the animals' welfare status, since they carry information about the environment in which they were produced, the animal's emotional state, and so on. This work therefore proposes a fully automatic framework that processes such vocalizations and reveals the context in which they were produced. To this end, we used suitable audio signal processing and pattern recognition algorithms. We recorded vocalizations from Maine Coon and European Shorthair breeds emitted in three different contexts: waiting for food, isolation in an unfamiliar environment, and brushing. The obtained results are excellent, making the proposed framework particularly useful for better understanding the acoustic communication between humans and cats.


Abstract

Cats employ vocalizations for communicating information, so their sounds can carry a wide range of meanings. An aspect of vocalization that is increasingly relevant to the welfare of these animals is its emotional interpretation and the recognition of the context in which it was produced. To this end, this work presents a proof of concept for the automatic analysis of cat vocalizations based on signal processing and pattern recognition techniques. It aims to demonstrate whether the emission context can be identified from meowing vocalizations, even when they are recorded in sub-optimal conditions. We rely on a dataset of vocalizations of Maine Coon and European Shorthair breeds emitted in three different contexts: waiting for food, isolation in an unfamiliar environment, and brushing. To capture the emission context, we extract two sets of acoustic parameters: mel-frequency cepstral coefficients and temporal modulation features. These are then modeled using a classification scheme based on a directed acyclic graph that divides the problem space. The experiments we conducted demonstrate the superiority of this scheme over a series of generative and discriminative classification solutions. These results open up new perspectives for deepening our knowledge of acoustic communication between humans and cats and, in general, between humans and animals.
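To make the idea of a directed acyclic graph dividing a three-class problem more concrete, here is a minimal sketch. It is not the authors' implementation. It assumes that each graph node holds a binary decider for one pair of contexts and eliminates the less likely one, so the three-class problem is reduced to a sequence of two-class problems. A simple nearest-centroid rule stands in for whatever model sits at each node, the function names `train_pairwise` and `dag_classify` are hypothetical, and the 2-D vectors are invented toy values rather than real mel-frequency cepstral or temporal modulation features.

```python
from itertools import combinations
from math import dist
from statistics import fmean


def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    return [fmean(column) for column in zip(*vectors)]


def train_pairwise(features_by_class):
    """Build one binary decider per class pair.

    Assumption: each decider is just the two class centroids
    (a stand-in for the real node models).
    """
    centroids = {label: centroid(vecs) for label, vecs in features_by_class.items()}
    return {
        frozenset(pair): {label: centroids[label] for label in pair}
        for pair in combinations(centroids, 2)
    }


def dag_classify(x, labels, deciders):
    """Walk the graph: each node compares the first and last remaining
    candidates and eliminates the one whose centroid is farther from x."""
    candidates = list(labels)
    while len(candidates) > 1:
        a, b = candidates[0], candidates[-1]
        node = deciders[frozenset((a, b))]
        if dist(x, node[a]) <= dist(x, node[b]):
            candidates.pop()    # b is eliminated
        else:
            candidates.pop(0)   # a is eliminated
    return candidates[0]


# Invented toy data: three well-separated clusters, one per context.
train = {
    "food": [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1]],
    "isolation": [[5.0, 5.0], [5.2, 4.9], [4.8, 5.1]],
    "brushing": [[1.0, 5.0], [0.8, 5.2], [1.1, 4.9]],
}
labels = list(train)
deciders = train_pairwise(train)

print(dag_classify([1.1, 0.9], labels, deciders))  # → food
```

With three contexts, every input passes through exactly two pairwise decisions before a label is returned. In a realistic setting the centroid rule would be replaced by a trained binary model per node, and the order of the nodes would be a design choice of its own.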